
TensorFlow Basics 14 - Multi-layer network for linear regression - Sequential and Functional API (standardization and validation_data)

TensorFlow 2022. 12. 1. 15:25

Sequential API: builds a simple network (blueprint), a linear stack of layers
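To make the "simple stack" idea concrete, here is a minimal NumPy sketch (an illustration, not Keras code) of what the Sequential model in this post computes: Dense(30, relu) feeds a linear Dense(1), strictly one layer after the other. The weights are random placeholders and the helpers `dense` and `sequential_forward` are hypothetical names. The parameter count should agree with `model.summary()` for the same layers: 8×30 + 30 for the hidden layer plus 30×1 + 1 for the output.

```python
import numpy as np

# Hypothetical NumPy sketch of the Sequential model: Dense(30, relu) -> Dense(1).
# Weights are random placeholders, not weights learned by Keras.
rng = np.random.default_rng(0)

def dense(x, w, b, activation=None):
    """Compute activation(x @ w + b), which is what a Dense layer does."""
    z = x @ w + b
    return np.maximum(z, 0.0) if activation == "relu" else z

n_features, n_hidden = 8, 30
w1, b1 = rng.normal(size=(n_features, n_hidden)), np.zeros(n_hidden)
w2, b2 = rng.normal(size=(n_hidden, 1)), np.zeros(1)

def sequential_forward(x):
    """Layers applied strictly one after another, as in a Sequential model."""
    hidden = dense(x, w1, b1, activation="relu")  # Dense(units=30, activation='relu')
    return dense(hidden, w2, b2)                  # Dense(units=1), no activation

x = rng.normal(size=(4, n_features))  # a batch of 4 (already standardized) samples
y = sequential_forward(x)
n_params = sum(a.size for a in (w1, b1, w2, b2))
print(y.shape, n_params)  # (4, 1) 301
```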

Functional API: builds a more complex and flexible network (blueprint) than the Sequential API allows, e.g. layers that branch and merge
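In the first Functional API model of this post, a `Concatenate` layer joins the raw input with the output of the second hidden layer before the single output unit. The NumPy sketch below (random placeholder weights; `init_dense` and `functional_forward` are hypothetical helpers) traces that data flow. The 8 input features plus the 30 hidden units give a 38-wide vector for the final Dense(1), so the total should come to 270 + 930 + 39 parameters.

```python
import numpy as np

# Hypothetical NumPy sketch of the first Functional API model:
# input -> Dense(30, relu) -> Dense(30, relu), then Concatenate([input, hidden2]) -> Dense(1).
rng = np.random.default_rng(0)

def init_dense(n_in, n_out):
    """Random placeholder weights and zero biases for one Dense layer."""
    return rng.normal(size=(n_in, n_out)), np.zeros(n_out)

def relu(z):
    return np.maximum(z, 0.0)

w1, b1 = init_dense(8, 30)
w2, b2 = init_dense(30, 30)
w_out, b_out = init_dense(8 + 30, 1)  # output layer sees the input and the last hidden layer

def functional_forward(x):
    net1 = relu(x @ w1 + b1)
    net2 = relu(net1 @ w2 + b2)
    concat = np.concatenate([x, net2], axis=-1)  # plays the role of Concatenate()([input_, net2])
    return concat @ w_out + b_out, concat

x = rng.normal(size=(4, 8))
y, concat = functional_forward(x)
n_params = sum(a.size for a in (w1, b1, w2, b2, w_out, b_out))
print(concat.shape, y.shape, n_params)  # (4, 38) (4, 1) 1239
```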

Functional API 2: builds a flexible network (blueprint) in which some inputs take a short path and others take a long path
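In the third model, the slices `x_train[:, :5]` and `x_train[:, 2:]` decide which features go down which path. Mapping those slice bounds onto `housing.feature_names` in plain Python (a sketch; the variable names are hypothetical) shows that the two inputs overlap: columns 2 to 4 feed both the wide (short) and the deep (long) path, and every feature is used at least once.

```python
# Feature names as printed by housing.feature_names in this post
feature_names = ['MedInc', 'HouseAge', 'AveRooms', 'AveBedrms',
                 'Population', 'AveOccup', 'Latitude', 'Longitude']

columns = list(range(len(feature_names)))
wide_idx = columns[:5]  # same slice as x_train[:, :5] -> wide_input (short path)
deep_idx = columns[2:]  # same slice as x_train[:, 2:] -> deep_input (long path)

wide = [feature_names[i] for i in wide_idx]
deep = [feature_names[i] for i in deep_idx]
shared = [name for name in wide if name in deep]  # features that feed both paths

print('wide :', wide)
print('deep :', deep)
print('both :', shared)  # ['AveRooms', 'AveBedrms', 'Population']
```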

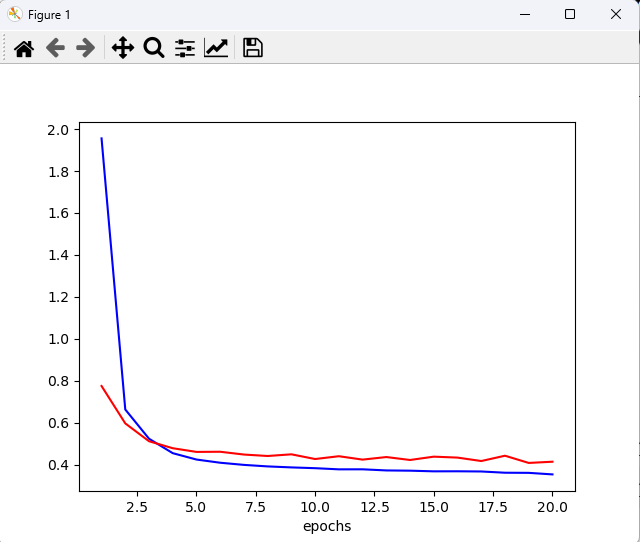

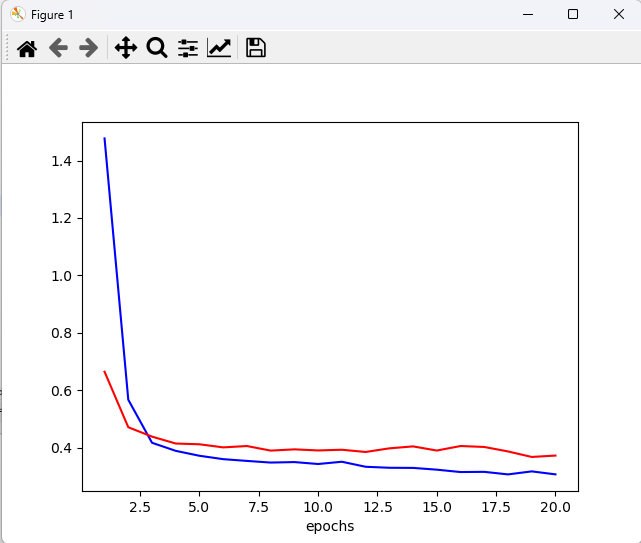

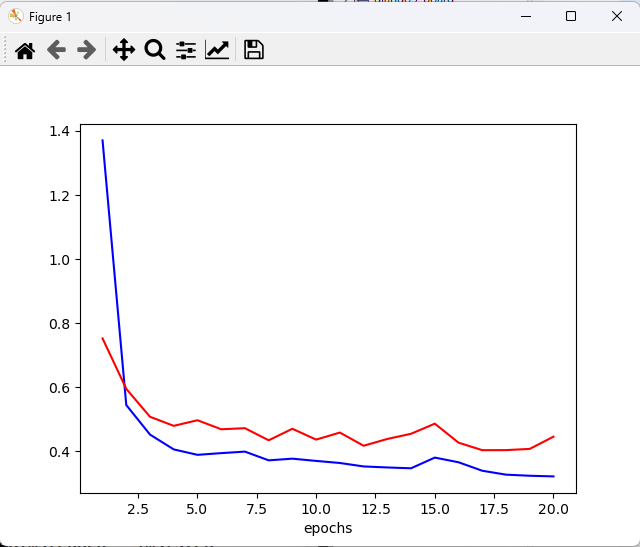

```python
# Multi-layer network for linear regression - Sequential, Functional api
from sklearn.datasets import fetch_california_housing
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from keras.models import Sequential
from keras.layers import Dense, Input, Concatenate
from keras import Model
import matplotlib.pyplot as plt

housing = fetch_california_housing()
print(housing.keys())
print(housing.data[:2])
print(housing.target[:2])
print(housing.feature_names)
print(housing.target_names)

x_train_all, x_test, y_train_all, y_test = train_test_split(housing.data, housing.target, random_state=12)
print(x_train_all.shape, x_test.shape, y_train_all.shape, y_test.shape)  # (15480, 8) (5160, 8) (15480,) (5160,)

# Use part of the training set as the validation dataset
x_train, x_valid, y_train, y_valid = train_test_split(x_train_all, y_train_all, random_state=12)
print(x_train.shape, x_valid.shape, y_train.shape, y_valid.shape)  # (11610, 8) (3870, 8) (11610,) (3870,)

# Scaling: standardization (each feature rescaled to mean 0, standard deviation 1)
# Fit the scaler on the training data only, then apply the same statistics to valid/test
scaler = StandardScaler()
x_train = scaler.fit_transform(x_train)
x_valid = scaler.transform(x_valid)
x_test = scaler.transform(x_test)
print(x_train[:2])

print('Sequential api : simple network (blueprint)')
model = Sequential()
model.add(Dense(units=30, activation='relu', input_shape=x_train.shape[1:]))  # x_train.shape is (11610, 8), so input_shape is (8,)
model.add(Dense(units=1))
model.compile(optimizer='adam', loss='mse', metrics=['mse'])
history = model.fit(x_train, y_train, epochs=20, batch_size=32,
                    validation_data=(x_valid, y_valid), verbose=2)
# evaluate returns [loss, mse]; the loss is also MSE, so both values are identical
print('evaluate :', model.evaluate(x_test, y_test, batch_size=32, verbose=0))

# predict
x_new = x_test[:3]
y_pred = model.predict(x_new)
print('predicted :', y_pred.ravel())
print('actual :', y_test[:3])

# Visualization
plt.plot(range(1, 21), history.history['mse'], c='b', label='mse')
plt.plot(range(1, 21), history.history['val_mse'], c='r', label='val_mse')
plt.xlabel('epochs')
plt.legend()
plt.show()

print('Functional api : more complex and flexible network (blueprint) than the previous method')
input_ = Input(shape=x_train.shape[1:])
net1 = Dense(units=30, activation='relu')(input_)
net2 = Dense(units=30, activation='relu')(net1)
concat = Concatenate()([input_, net2])  # Concatenate layer joining the input layer with the last hidden layer's output
output = Dense(units=1)(concat)  # Output layer: 1 unit, no activation function, fed by the Concatenate layer
model2 = Model(inputs=[input_], outputs=[output])
model2.compile(optimizer='adam', loss='mse', metrics=['mse'])
history = model2.fit(x_train, y_train, epochs=20, batch_size=32,
                     validation_data=(x_valid, y_valid), verbose=2)
print('evaluate :', model2.evaluate(x_test, y_test, batch_size=32, verbose=0))

# predict
x_new = x_test[:3]
y_pred = model2.predict(x_new)
print('predicted :', y_pred.ravel())
print('actual :', y_test[:3])

# Visualization
plt.plot(range(1, 21), history.history['mse'], c='b', label='mse')
plt.plot(range(1, 21), history.history['val_mse'], c='r', label='val_mse')
plt.xlabel('epochs')
plt.legend()
plt.show()

print('Functional api 2 : flexible network (blueprint) - some inputs take a short path, some a long path')
# Use multiple inputs
# e.g. 5 features (columns 0-4) take the short (wide) path and 6 features (columns 2-7) take the long (deep) path;
# columns 2-4 are fed to both paths
input_a = Input(shape=[5], name='wide_input')
input_b = Input(shape=[6], name='deep_input')
net1 = Dense(units=30, activation='relu')(input_b)
net2 = Dense(units=30, activation='relu')(net1)
concat = Concatenate()([input_a, net2])
output = Dense(units=1, name='output')(concat)
model3 = Model(inputs=[input_a, input_b], outputs=output)
model3.compile(optimizer='adam', loss='mse', metrics=['mse'])

# With multiple inputs, fit/evaluate/predict take a tuple of input arrays
x_train_a, x_train_b = x_train[:, :5], x_train[:, 2:]  # for fit (training)
x_valid_a, x_valid_b = x_valid[:, :5], x_valid[:, 2:]  # for fit (validation)
x_test_a, x_test_b = x_test[:, :5], x_test[:, 2:]      # for evaluate
x_new_a, x_new_b = x_test_a[:3], x_test_b[:3]          # for predict

history = model3.fit((x_train_a, x_train_b), y_train, epochs=20, batch_size=32,
                     validation_data=((x_valid_a, x_valid_b), y_valid), verbose=2)
print('evaluate :', model3.evaluate((x_test_a, x_test_b), y_test, batch_size=32, verbose=0))

# predict
y_pred = model3.predict((x_new_a, x_new_b))
print('predicted :', y_pred.ravel())
print('actual :', y_test[:3])

# Visualization
plt.plot(range(1, 21), history.history['mse'], c='b', label='mse')
plt.plot(range(1, 21), history.history['val_mse'], c='r', label='val_mse')
plt.xlabel('epochs')
plt.legend()
plt.show()
```

Console output:

```text
dict_keys(['data', 'target', 'frame', 'target_names', 'feature_names', 'DESCR'])
[[ 8.32520000e+00 4.10000000e+01 6.98412698e+00 1.02380952e+00 3.22000000e+02 2.55555556e+00 3.78800000e+01 -1.22230000e+02]
 [ 8.30140000e+00 2.10000000e+01 6.23813708e+00 9.71880492e-01 2.40100000e+03 2.10984183e+00 3.78600000e+01 -1.22220000e+02]]
[4.526 3.585]
['MedInc', 'HouseAge', 'AveRooms', 'AveBedrms', 'Population', 'AveOccup', 'Latitude', 'Longitude']
['MedHouseVal']
(15480, 8) (5160, 8) (15480,) (5160,)
(11610, 8) (3870, 8) (11610,) (3870,)
[[-0.10138585 0.73517712 0.05976087 -0.18839989 -0.74579257 -0.01735453 0.97008034 -1.2905445 ]
 [-0.5665671 1.45277988 -0.00229909 0.01524485 -0.25154658 -0.0026638 -0.77575213 0.62656276]]
Sequential api : simple network (blueprint)
2022-12-01 16:15:56.198989: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX AVX2
To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.
Epoch 1/20
363/363 - 1s - loss: 1.6994 - mse: 1.6994 - val_loss: 0.7175 - val_mse: 0.7175 - 539ms/epoch - 1ms/step
Epoch 2/20
363/363 - 0s - loss: 0.5974 - mse: 0.5974 - val_loss: 0.5673 - val_mse: 0.5673 - 175ms/epoch - 481us/step
Epoch 3/20
363/363 - 0s - loss: 0.4876 - mse: 0.4876 - val_loss: 0.5041 - val_mse: 0.5041 - 175ms/epoch - 482us/step
Epoch 4/20
363/363 - 0s - loss: 0.4344 - mse: 0.4344 - val_loss: 0.4738 - val_mse: 0.4738 - 168ms/epoch - 462us/step
Epoch 5/20
363/363 - 0s - loss: 0.4118 - mse: 0.4118 - val_loss: 0.4507 - val_mse: 0.4507 - 163ms/epoch - 448us/step
Epoch 6/20
363/363 - 0s - loss: 0.4021 - mse: 0.4021 - val_loss: 0.4586 - val_mse: 0.4586 - 166ms/epoch - 456us/step
Epoch 7/20
363/363 - 0s - loss: 0.3933 - mse: 0.3933 - val_loss: 0.4442 - val_mse: 0.4442 - 167ms/epoch - 459us/step
Epoch 8/20
363/363 - 0s - loss: 0.3900 - mse: 0.3900 - val_loss: 0.4425 - val_mse: 0.4425 - 167ms/epoch - 460us/step
Epoch 9/20
363/363 - 0s - loss: 0.3787 - mse: 0.3787 - val_loss: 0.4348 - val_mse: 0.4348 - 158ms/epoch - 436us/step
Epoch 10/20
363/363 - 0s - loss: 0.3769 - mse: 0.3769 - val_loss: 0.4296 - val_mse: 0.4296 - 163ms/epoch - 448us/step
Epoch 11/20
363/363 - 0s - loss: 0.3703 - mse: 0.3703 - val_loss: 0.4184 - val_mse: 0.4184 - 160ms/epoch - 441us/step
Epoch 12/20
363/363 - 0s - loss: 0.3795 - mse: 0.3795 - val_loss: 0.4290 - val_mse: 0.4290 - 164ms/epoch - 453us/step
Epoch 13/20
363/363 - 0s - loss: 0.3670 - mse: 0.3670 - val_loss: 0.4152 - val_mse: 0.4152 - 163ms/epoch - 448us/step
Epoch 14/20
363/363 - 0s - loss: 0.3631 - mse: 0.3631 - val_loss: 0.4146 - val_mse: 0.4146 - 157ms/epoch - 433us/step
Epoch 15/20
363/363 - 0s - loss: 0.3559 - mse: 0.3559 - val_loss: 0.4062 - val_mse: 0.4062 - 162ms/epoch - 447us/step
Epoch 16/20
363/363 - 0s - loss: 0.3542 - mse: 0.3542 - val_loss: 0.4232 - val_mse: 0.4232 - 161ms/epoch - 444us/step
Epoch 17/20
363/363 - 0s - loss: 0.3509 - mse: 0.3509 - val_loss: 0.4114 - val_mse: 0.4114 - 163ms/epoch - 448us/step
Epoch 18/20
363/363 - 0s - loss: 0.3522 - mse: 0.3522 - val_loss: 0.4051 - val_mse: 0.4051 - 161ms/epoch - 444us/step
Epoch 19/20
363/363 - 0s - loss: 0.3474 - mse: 0.3474 - val_loss: 0.4032 - val_mse: 0.4032 - 162ms/epoch - 447us/step
Epoch 20/20
363/363 - 0s - loss: 0.3511 - mse: 0.3511 - val_loss: 0.4021 - val_mse: 0.4021 - 170ms/epoch - 468us/step
evaluate : [8.679112434387207, 8.679112434387207]
1/1 [==============================] - 0s 38ms/step
predicted : [1.3117406 6.4562135 1.9024206]
actual : [2.114 1.952 2.418]
Functional api : more complex and flexible network (blueprint) than the previous method
Epoch 1/20
363/363 - 1s - loss: 1.2802 - mse: 1.2802 - val_loss: 0.6156 - val_mse: 0.6156 - 625ms/epoch - 2ms/step
Epoch 2/20
363/363 - 0s - loss: 0.4885 - mse: 0.4885 - val_loss: 0.4824 - val_mse: 0.4824 - 169ms/epoch - 467us/step
Epoch 3/20
363/363 - 0s - loss: 0.4104 - mse: 0.4104 - val_loss: 0.4410 - val_mse: 0.4410 - 174ms/epoch - 479us/step
Epoch 4/20
363/363 - 0s - loss: 0.3820 - mse: 0.3820 - val_loss: 0.4325 - val_mse: 0.4325 - 179ms/epoch - 493us/step
Epoch 5/20
363/363 - 0s - loss: 0.3688 - mse: 0.3688 - val_loss: 0.4200 - val_mse: 0.4200 - 180ms/epoch - 496us/step
Epoch 6/20
363/363 - 0s - loss: 0.3555 - mse: 0.3555 - val_loss: 0.3985 - val_mse: 0.3985 - 179ms/epoch - 493us/step
Epoch 7/20
363/363 - 0s - loss: 0.3517 - mse: 0.3517 - val_loss: 0.4452 - val_mse: 0.4452 - 179ms/epoch - 493us/step
Epoch 8/20
363/363 - 0s - loss: 0.3558 - mse: 0.3558 - val_loss: 0.3942 - val_mse: 0.3942 - 181ms/epoch - 498us/step
Epoch 9/20
363/363 - 0s - loss: 0.3421 - mse: 0.3421 - val_loss: 0.3880 - val_mse: 0.3880 - 180ms/epoch - 496us/step
Epoch 10/20
363/363 - 0s - loss: 0.3414 - mse: 0.3414 - val_loss: 0.3731 - val_mse: 0.3731 - 181ms/epoch - 499us/step
Epoch 11/20
363/363 - 0s - loss: 0.3321 - mse: 0.3321 - val_loss: 0.3951 - val_mse: 0.3951 - 180ms/epoch - 495us/step
Epoch 12/20
363/363 - 0s - loss: 0.3230 - mse: 0.3230 - val_loss: 0.4059 - val_mse: 0.4059 - 182ms/epoch - 501us/step
Epoch 13/20
363/363 - 0s - loss: 0.3173 - mse: 0.3173 - val_loss: 0.3581 - val_mse: 0.3581 - 181ms/epoch - 500us/step
Epoch 14/20
363/363 - 0s - loss: 0.3084 - mse: 0.3084 - val_loss: 0.3754 - val_mse: 0.3754 - 175ms/epoch - 481us/step
Epoch 15/20
363/363 - 0s - loss: 0.3102 - mse: 0.3102 - val_loss: 0.3720 - val_mse: 0.3720 - 179ms/epoch - 492us/step
Epoch 16/20
363/363 - 0s - loss: 0.3098 - mse: 0.3098 - val_loss: 0.3818 - val_mse: 0.3818 - 180ms/epoch - 495us/step
Epoch 17/20
363/363 - 0s - loss: 0.3124 - mse: 0.3124 - val_loss: 0.3872 - val_mse: 0.3872 - 179ms/epoch - 492us/step
Epoch 18/20
363/363 - 0s - loss: 0.3120 - mse: 0.3120 - val_loss: 0.3528 - val_mse: 0.3528 - 181ms/epoch - 500us/step
Epoch 19/20
363/363 - 0s - loss: 0.2987 - mse: 0.2987 - val_loss: 0.3804 - val_mse: 0.3804 - 181ms/epoch - 498us/step
Epoch 20/20
363/363 - 0s - loss: 0.2957 - mse: 0.2957 - val_loss: 0.3557 - val_mse: 0.3557 - 180ms/epoch - 495us/step
evaluate : [16.428415298461914, 16.428415298461914]
1/1 [==============================] - 0s 27ms/step
predicted : [1.5946229 7.950448 1.9005392]
actual : [2.114 1.952 2.418]
Functional api 2 : flexible network (blueprint) - some inputs take a short path, some a long path
Epoch 1/20
363/363 - 1s - loss: 1.3706 - mse: 1.3706 - val_loss: 0.7521 - val_mse: 0.7521 - 568ms/epoch - 2ms/step
Epoch 2/20
363/363 - 0s - loss: 0.5440 - mse: 0.5440 - val_loss: 0.5946 - val_mse: 0.5946 - 175ms/epoch - 482us/step
Epoch 3/20
363/363 - 0s - loss: 0.4517 - mse: 0.4517 - val_loss: 0.5073 - val_mse: 0.5073 - 179ms/epoch - 493us/step
Epoch 4/20
363/363 - 0s - loss: 0.4054 - mse: 0.4054 - val_loss: 0.4790 - val_mse: 0.4790 - 174ms/epoch - 478us/step
Epoch 5/20
363/363 - 0s - loss: 0.3885 - mse: 0.3885 - val_loss: 0.4965 - val_mse: 0.4965 - 178ms/epoch - 490us/step
Epoch 6/20
363/363 - 0s - loss: 0.3938 - mse: 0.3938 - val_loss: 0.4684 - val_mse: 0.4684 - 179ms/epoch - 494us/step
Epoch 7/20
363/363 - 0s - loss: 0.3985 - mse: 0.3985 - val_loss: 0.4717 - val_mse: 0.4717 - 176ms/epoch - 486us/step
Epoch 8/20
363/363 - 0s - loss: 0.3713 - mse: 0.3713 - val_loss: 0.4339 - val_mse: 0.4339 - 185ms/epoch - 509us/step
Epoch 9/20
363/363 - 0s - loss: 0.3765 - mse: 0.3765 - val_loss: 0.4699 - val_mse: 0.4699 - 176ms/epoch - 485us/step
Epoch 10/20
363/363 - 0s - loss: 0.3695 - mse: 0.3695 - val_loss: 0.4360 - val_mse: 0.4360 - 178ms/epoch - 490us/step
Epoch 11/20
363/363 - 0s - loss: 0.3630 - mse: 0.3630 - val_loss: 0.4580 - val_mse: 0.4580 - 178ms/epoch - 489us/step
Epoch 12/20
363/363 - 0s - loss: 0.3522 - mse: 0.3522 - val_loss: 0.4168 - val_mse: 0.4168 - 180ms/epoch - 495us/step
Epoch 13/20
363/363 - 0s - loss: 0.3490 - mse: 0.3490 - val_loss: 0.4381 - val_mse: 0.4381 - 179ms/epoch - 494us/step
Epoch 14/20
363/363 - 0s - loss: 0.3465 - mse: 0.3465 - val_loss: 0.4542 - val_mse: 0.4542 - 176ms/epoch - 486us/step
Epoch 15/20
363/363 - 0s - loss: 0.3799 - mse: 0.3799 - val_loss: 0.4859 - val_mse: 0.4859 - 179ms/epoch - 492us/step
Epoch 16/20
363/363 - 0s - loss: 0.3653 - mse: 0.3653 - val_loss: 0.4265 - val_mse: 0.4265 - 177ms/epoch - 489us/step
Epoch 17/20
363/363 - 0s - loss: 0.3388 - mse: 0.3388 - val_loss: 0.4031 - val_mse: 0.4031 - 187ms/epoch - 516us/step
Epoch 18/20
363/363 - 0s - loss: 0.3266 - mse: 0.3266 - val_loss: 0.4032 - val_mse: 0.4032 - 177ms/epoch - 488us/step
Epoch 19/20
363/363 - 0s - loss: 0.3232 - mse: 0.3232 - val_loss: 0.4070 - val_mse: 0.4070 - 180ms/epoch - 496us/step
Epoch 20/20
363/363 - 0s - loss: 0.3211 - mse: 0.3211 - val_loss: 0.4451 - val_mse: 0.4451 - 177ms/epoch - 488us/step
evaluate : [12.521573066711426, 12.521573066711426]
1/1 [==============================] - 0s 29ms/step
predicted : [1.8839229 7.020034 1.8509595]
actual : [2.114 1.952 2.418]
```
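The two `train_test_split` calls and the scaler can be sketched without scikit-learn. This assumes sklearn's rule for a float `test_size` (default 0.25): `n_test = ceil(test_size * n)` and the remainder is training data, which reproduces the shapes printed above. The data is synthetic (`rng.lognormal`), the shuffle is not sklearn's, and `split_sizes`/`standardize` are hypothetical helpers. The point being illustrated is that the mean and standard deviation come from the training split only and are then reused unchanged for the validation and test splits, which is what `fit_transform` on train followed by `transform` on the others does.

```python
import math
import numpy as np

def split_sizes(n_samples, test_size=0.25):
    """(n_train, n_test) following sklearn's rule for a float test_size:
    n_test = ceil(test_size * n_samples), n_train = the remainder."""
    n_test = math.ceil(test_size * n_samples)
    return n_samples - n_test, n_test

n_total = 15480 + 5160                       # row count implied by the printed shapes
n_train_all, n_test = split_sizes(n_total)   # (15480, 5160)
n_train, n_valid = split_sizes(n_train_all)  # (11610, 3870)

# Synthetic stand-in for housing.data; the shuffle here is NOT sklearn's
rng = np.random.default_rng(12)
data = rng.lognormal(size=(n_total, 8))
perm = rng.permutation(n_total)
test, train_all = data[perm[:n_test]], data[perm[n_test:]]
valid, train = train_all[:n_valid], train_all[n_valid:]

# "fit": statistics come from the training split only (population std, ddof=0)
mean, std = train.mean(axis=0), train.std(axis=0)

def standardize(x):
    """'transform': reuse the training mean/std for any split."""
    return (x - mean) / std

train_s, valid_s, test_s = standardize(train), standardize(valid), standardize(test)
print(train_s.shape, valid_s.shape, test_s.shape)  # (11610, 8) (3870, 8) (5160, 8)
```

Only the training split is guaranteed to come out with mean 0 and standard deviation 1; the validation and test splits land close to that but not exactly, which is expected when they are scaled with statistics they did not contribute to.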

Visualization of mse and val_mse: Sequential API

Functional API

Functional API 2
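When reading these curves, a common question is which epoch had the lowest val_mse. The sketch below answers it with plain Python; the values are copied from the Functional API run in the console output, and in a live session you could pass `history.history['val_mse']` instead. `best_epoch` is a hypothetical helper, not a Keras function.

```python
# val_mse per epoch, copied from the Functional API run in the console output
val_mse = [0.6156, 0.4824, 0.4410, 0.4325, 0.4200, 0.3985, 0.4452, 0.3942,
           0.3880, 0.3731, 0.3951, 0.4059, 0.3581, 0.3754, 0.3720, 0.3818,
           0.3872, 0.3528, 0.3804, 0.3557]

def best_epoch(values):
    """Return (1-based epoch, value) of the lowest validation loss."""
    idx = min(range(len(values)), key=values.__getitem__)
    return idx + 1, values[idx]

epoch, value = best_epoch(val_mse)
print(epoch, value)  # 18 0.3528
```

Keras can also track this during training: `keras.callbacks.EarlyStopping(monitor='val_loss', restore_best_weights=True)` with a `patience` value of your choice, passed via `fit(..., callbacks=[...])`, stops training once val_loss stops improving and keeps the best weights.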
